25 Jun 2015 Building an automatic environment using Consul and Docker – part 1

Service discovery has become an important component of most environments that can no longer be satisfied with static, manually maintained configuration.

Modern service discovery tools give components in production environments a way to find and communicate with each other, which lets services scale automatically. Put simply, each process or service registers itself with a service directory and provides a set of information, such as its IP and port or its role in the cluster (master or slave).
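To make the register-and-look-up idea concrete, here is a toy in-memory service directory in Python. It only illustrates the concept and is not Consul's API; the names ServiceInstance, ServiceDirectory, register, deregister, and lookup are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ServiceInstance:
    """One registered instance of a service (hypothetical model)."""
    service_id: str
    name: str
    address: str
    port: int
    tags: Tuple[str, ...] = ()


@dataclass
class ServiceDirectory:
    """Toy in-memory service directory; illustrates the idea, not Consul's API."""
    instances: Dict[str, ServiceInstance] = field(default_factory=dict)

    def register(self, instance: ServiceInstance) -> None:
        # Registering the same service_id again replaces the old entry.
        self.instances[instance.service_id] = instance

    def deregister(self, service_id: str) -> None:
        self.instances.pop(service_id, None)

    def lookup(self, name: str, tag: Optional[str] = None) -> List[ServiceInstance]:
        # Every instance of `name`, optionally filtered by a tag such as a role.
        matches = [
            inst for inst in self.instances.values()
            if inst.name == name and (tag is None or tag in inst.tags)
        ]
        return sorted(matches, key=lambda inst: inst.service_id)
```

For example, after registering two "db" instances tagged "master" and "slave", `lookup("db", tag="master")` returns only the master. A real tool like Consul adds replication, health checking, and DNS/HTTP interfaces on top of this basic bookkeeping.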

This is the first post in a series on building a distributed application environment. In this post, I give an example of a simple environment built around a popular service discovery solution, using a set of tools and libraries to make the environment automatic. The main tools used in this example are:

- Consul.

- Consul-template.

- Docker.

- python-consul library.

Introducing Consul

Consul is a highly available and distributed service discovery solution created by HashiCorp that provides a way for processes and services to register themselves and be aware of other components and services in a distributed environment via DNS or HTTP interfaces.

In addition to service discovery, Consul provides several other key features, such as a distributed key/value store that can serve as a powerful solution for shared configuration.
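Consul's HTTP API returns key/value entries as a JSON list in which each value is base64-encoded, so a client has to decode them before use. A minimal sketch using only the Python standard library, assuming an agent reachable at `base_url`; the helper names and the example key prefix are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request
from typing import Dict, Optional


def decode_kv_response(body: str) -> Dict[str, Optional[str]]:
    """Map each key in a GET /v1/kv/... response to its decoded value.

    Consul returns values base64-encoded; a key stored without a value
    comes back with "Value": null.
    """
    decoded = {}
    for entry in json.loads(body):
        raw = entry.get("Value")
        decoded[entry["Key"]] = (
            base64.b64decode(raw).decode("utf-8") if raw is not None else None
        )
    return decoded


def read_prefix(base_url: str, prefix: str) -> Dict[str, Optional[str]]:
    """Fetch every key under `prefix` (urllib raises HTTPError 404 if none exist)."""
    url = f"{base_url}/v1/kv/{urllib.parse.quote(prefix)}?recurse"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return decode_kv_response(resp.read().decode("utf-8"))
```

With the single server from the next section, `read_prefix("http://localhost:8500", "config/python_app/")` would return a plain dict of settings that every service can share.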

In addition to the key/value store, Consul can run health checks against the services registered in its catalog. A check can take several forms, such as an HTTP request, a script, or a TTL (time-to-live) check.
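In Consul's HTTP API, a check is attached to a service registration as a small JSON object. The sketch below builds the HTTP and TTL variants as Python dicts; the helper names are hypothetical, and script checks are left out because their field name differs between Consul versions (older releases use `Script`, newer ones `Args`).

```python
from typing import Dict


def http_check(url: str, interval: str = "10s") -> Dict[str, str]:
    """Consul requests `url` every `interval`; a 2xx status counts as passing."""
    return {"HTTP": url, "Interval": interval}


def ttl_check(ttl: str = "30s") -> Dict[str, str]:
    """The service must report in before `ttl` expires, otherwise the check turns critical."""
    return {"TTL": ttl}


def ttl_pass_path(service_id: str) -> str:
    """Agent endpoint the service calls to mark its TTL check as passing.

    A check registered together with a service gets the ID "service:<service-id>".
    """
    return f"/v1/agent/check/pass/service:{service_id}"
```

The design difference is who does the work: with an HTTP check Consul polls the service, while with a TTL check the service has to push a heartbeat itself.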

The official Consul documentation explains how to download and install Consul on your server, but in this example I will use Docker to provision Consul containers for better portability.

I used Jeff Lindsay's Consul Docker image (progrium/consul), which is only about 50MB because it is based on the busybox image, and which has special features such as the cmd:run option that simplifies the command needed to run the container.

Starting Single Consul Server

To start a single Consul server using Docker:

[code]$ sudo docker run -p 8400:8400 -p 8500:8500 \
-p 8600:53/udp -h consul_s progrium/consul -server -bootstrap[/code]

This command runs a Consul container as a bootstrapped server, which means it starts a cluster with only one server. It also maps port 8500 for the HTTP API, port 8600 on the host to the container's DNS port 53, and port 8400 for the CLI RPC endpoint.

You can use HTTP or DNS to check that the server is available. To query the Consul agent over HTTP:

[code]$ curl localhost:8500/v1/catalog/nodes
[{"Node":"node1","Address":"172.17.0.28"}][/code]

This means that only one node is registered as a Consul agent, with the private IP "172.17.0.28". To use the DNS endpoint instead:

[code]
$ dig @0.0.0.0 -p 8600 node1.node.consul
…
;; ANSWER SECTION:
node1.node.consul. 0 IN A 172.17.0.28
…
[/code]

Consul can act as a local DNS server for your environment, resolving service names to the corresponding IP addresses. It also serves DNS SRV records, which give both the location (host and IP) and the port of a service.
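An SRV answer line carries the priority, weight, port, and target host of an instance, which is what lets a client discover the port without extra configuration. A sketch of parsing one such line from dig's ANSWER SECTION; the parser name and the sample record used with it (service python_app on a node app1 in datacenter dc1) are illustrative assumptions, not output captured from this setup. A query of that kind would look like `dig @0.0.0.0 -p 8600 python_app.service.consul SRV`.

```python
from typing import NamedTuple


class SrvRecord(NamedTuple):
    name: str
    ttl: int
    priority: int
    weight: int
    port: int
    target: str


def parse_srv_line(line: str) -> SrvRecord:
    """Parse '<name> <ttl> IN SRV <priority> <weight> <port> <target>'."""
    fields = line.split()
    if len(fields) != 8 or fields[2:4] != ["IN", "SRV"]:
        raise ValueError(f"not an SRV answer line: {line!r}")
    name, ttl, _cls, _type, priority, weight, port, target = fields
    return SrvRecord(name, int(ttl), int(priority), int(weight), int(port), target)
```

The target is a host name rather than an address; its A record gives the IP, so host and port together are enough to open a connection.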

Later in the post we will see how to use the Consul DNS interface to look up the services registered with Consul.

Consul comes with an open-source web UI that can display and manage services, agents, and health checks. The web UI is already included in the Docker image; to start Consul with the web UI enabled:

[code]$ docker run -d --name consul -p 8400:8400 -p 8500:8500 \
-p 8600:53/udp -h consul_s progrium/consul -server -bootstrap \
-ui-dir /ui[/code]

Setting Up Consul Cluster

Consul is designed to support multiple datacenters. Within each datacenter, Consul runs a highly available cluster whose data is replicated across several Consul servers.

Consul's documentation recommends 3 or 5 servers per datacenter to avoid failures and data loss. However, Consul itself can scale to hundreds of nodes across multiple datacenters.
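The 3-or-5 recommendation follows from Raft's majority rule, which Consul servers use for consensus: a cluster of n servers needs floor(n/2) + 1 votes to elect a leader and commit writes, so it keeps working as long as no more than n minus that quorum fail. A short Python sketch of the arithmetic (function names are my own):

```python
def quorum(servers: int) -> int:
    """Votes needed for a Raft majority among `servers` servers."""
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """Servers that can fail while a majority is still reachable."""
    return servers - quorum(servers)


for n in (1, 3, 4, 5):
    print(f"{n} server(s): quorum {quorum(n)}, tolerates {failure_tolerance(n)} failure(s)")
```

Four servers tolerate no more failures than three, which is why odd cluster sizes are preferred. With three servers the quorum is two, which matches the "Election won. Tally: 2" line in the cluster log further down.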

To start a Consul cluster, we will start three Consul server agents, each on its own host, and map more ports than before so the cluster nodes can communicate with each other:

[code]hussein@consul-server1:~$ sudo docker run -d -h consul_s1 \
-p 8300:8300 \
-p 8301:8301 \
-p 8301:8301/udp \
-p 8302:8302 \
-p 8302:8302/udp \
-p 8400:8400 \
-p 8500:8500 \
-p 53:53/udp \
--name consul_s1 progrium/consul \
-server -advertise <public-ip> -bootstrap-expect 3[/code]
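Since every server publishes the same set of ports, the `-p` flags can also be generated from a single table if you script the launch from Python. In this sketch the table mirrors the command above (8300 server RPC, 8301 and 8302 Serf LAN and WAN gossip over TCP and UDP, 8400 CLI RPC, 8500 HTTP API, 53 DNS over UDP); the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

# (host port, container port, protocols, purpose), as in the command above
PortSpec = Tuple[int, int, Tuple[str, ...], str]

CONSUL_SERVER_PORTS: List[PortSpec] = [
    (8300, 8300, ("tcp",), "server RPC"),
    (8301, 8301, ("tcp", "udp"), "Serf LAN gossip"),
    (8302, 8302, ("tcp", "udp"), "Serf WAN gossip"),
    (8400, 8400, ("tcp",), "CLI RPC"),
    (8500, 8500, ("tcp",), "HTTP API"),
    (53, 53, ("udp",), "DNS"),
]


def port_flags(ports: Sequence[PortSpec] = CONSUL_SERVER_PORTS) -> List[str]:
    """Expand the port table into docker run -p arguments."""
    flags: List[str] = []
    for host, container, protocols, _purpose in ports:
        for proto in protocols:
            # Docker publishes TCP when no /protocol suffix is given.
            suffix = "" if proto == "tcp" else f"/{proto}"
            flags += ["-p", f"{host}:{container}{suffix}"]
    return flags


def server_command(name: str, advertise_ip: str, extra: Sequence[str]) -> List[str]:
    """Hypothetical helper: the full docker run argv for one Consul server."""
    return (
        ["sudo", "docker", "run", "-d", "-h", name]
        + port_flags()
        + ["--name", name, "progrium/consul", "-server", "-advertise", advertise_ip]
        + list(extra)
    )
```

Passing the resulting list to `subprocess.run(..., check=True)` would launch the container; `extra` would hold `-bootstrap-expect 3` for the first server and `-join <server1-ip>` for the other two.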

Then, on the other two servers, run the following (shown for the second server; on the third, use consul_s3 as the hostname and container name):

[code]hussein@consul-server2:~$ sudo docker run -d -h consul_s2 \
-p 8300:8300 \
-p 8301:8301 \
-p 8301:8301/udp \
-p 8302:8302 \
-p 8302:8302/udp \
-p 8400:8400 \
-p 8500:8500 \
-p 53:53/udp \
--name consul_s2 progrium/consul \
-server -advertise <public-ip> -join <server1-ip>[/code]

To monitor the cluster status, follow the container's logs with docker logs -f (for example, docker logs -f consul_s1). You should see output like this:

[code highlight="3,8,9,14,21-23"]
2015/05/12 22:01:53 [ERR] agent: failed to sync remote state: No cluster leader
2015/05/12 22:02:17 [INFO] serf: EventMemberJoin: consuls2 46.101.58.142
2015/05/12 22:02:17 [INFO] consul: adding server consuls2 (Addr: 46.101.58.142:8300) (DC: dc1)
2015/05/12 22:02:21 [ERR] agent: failed to sync remote state: No cluster leader
2015/05/12 22:05:12 [ERR] agent: failed to sync remote state: No cluster leader
….
2015/05/12 22:05:13 [INFO] serf: EventMemberJoin: consuls3 46.101.42.56
2015/05/12 22:05:13 [INFO] consul: adding server consuls3 (Addr: 46.101.42.56:8300) (DC: dc1)
2015/05/12 22:05:13 [INFO] consul: Attempting bootstrap with nodes:
[178.62.122.178:8300 46.101.58.142:8300 46.101.42.56:8300]
2015/05/12 22:05:13 [WARN] raft: Heartbeat timeout reached, starting election
2015/05/12 22:05:13 [INFO] raft: Node at 178.62.122.178:8300 [Candidate] entering Candidate state
2015/05/12 22:05:13 [WARN] raft: Remote peer 46.101.58.142:8300 does not have local node 178.62.122.178:8300 as a peer
2015/05/12 22:05:13 [INFO] raft: Election won. Tally: 2
2015/05/12 22:05:13 [INFO] raft: Node at 178.62.122.178:8300 [Leader] entering Leader state
2015/05/12 22:05:13 [INFO] consul: cluster leadership acquired
2015/05/12 22:05:13 [INFO] consul: New leader elected: consuls1
2015/05/12 22:05:13 [WARN] raft: Remote peer 46.101.42.56:8300 does not have local node 178.62.122.178:8300 as a peer
2015/05/12 22:05:13 [INFO] raft: pipelining replication to peer 46.101.58.142:8300
2015/05/12 22:05:13 [INFO] raft: pipelining replication to peer 46.101.42.56:8300
2015/05/12 22:05:13 [INFO] consul: member 'consuls3' joined, marking health alive
2015/05/12 22:05:13 [INFO] consul: member 'consuls1' joined, marking health alive
2015/05/12 22:05:13 [INFO] consul: member 'consuls2' joined, marking health alive
[/code]
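Rather than reading logs, you can also poll the agent's `/v1/status/leader` endpoint (a JSON string such as "178.62.122.178:8300", empty while no leader is elected) and `/v1/status/peers` (a JSON list of the Raft peers). A minimal Python sketch; the function names and the expected server count are assumptions for this three-server setup.

```python
import json
import urllib.request
from typing import Any, List


def fetch_json(base_url: str, path: str) -> Any:
    with urllib.request.urlopen(base_url + path, timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


def cluster_ready(leader: str, peers: List[str], expected: int) -> bool:
    """True once there is a leader and at least `expected` Raft peers."""
    # /v1/status/leader returns an empty string while no leader is elected.
    return bool(leader) and leader in peers and len(peers) >= expected


def check_cluster(base_url: str, expected: int = 3) -> bool:
    leader = fetch_json(base_url, "/v1/status/leader")
    peers = fetch_json(base_url, "/v1/status/peers")
    return cluster_ready(leader, peers, expected)
```

For example, `check_cluster("http://178.62.122.178:8500")` should start returning True at about the point where the log reports "cluster leadership acquired".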

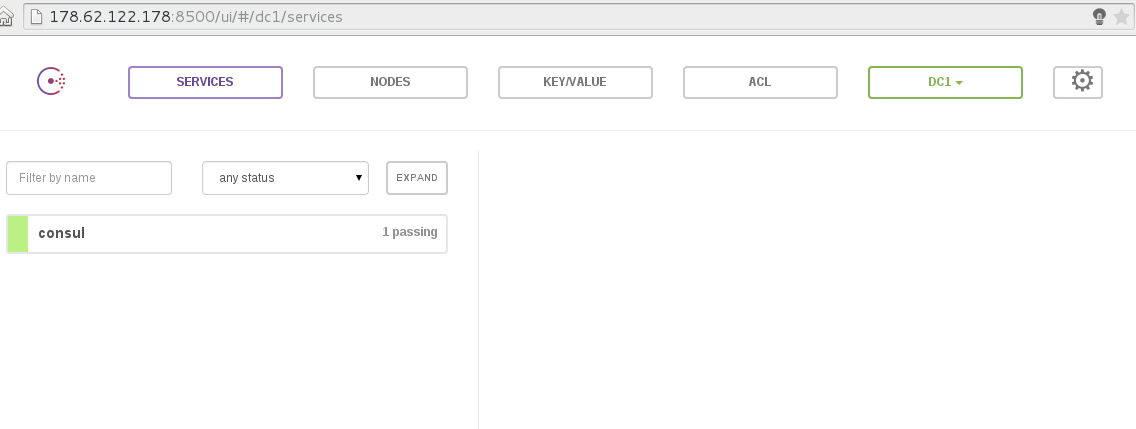

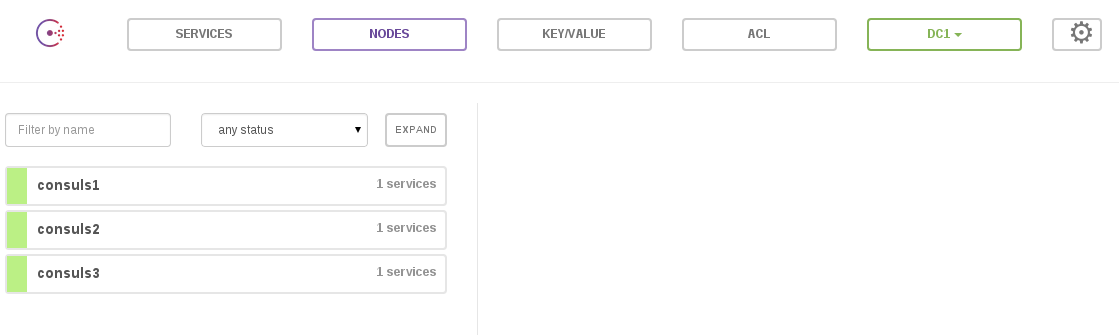

And when trying the Web UI:

Building A Python Application with python-consul

After creating a Consul cluster, you will find that although Consul provides a set of features to manage registered services, it does not provide a mechanism to register services with it automatically.

Registering services automatically is therefore a challenge. For this example, I created a simple Flask application that registers itself with Consul on startup and exposes a /healthcheck endpoint for monitoring its health.
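The author's application is written with Flask. To keep this sketch free of dependencies, the same /healthcheck idea is shown with Python's standard `http.server` instead; the handler, the helper names, and the "OK" body are assumptions. Consul's HTTP check treats any 2xx status as passing, so returning 200 is all the endpoint strictly needs.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET /healthcheck with 200 and everything else with 404."""

    def do_GET(self):
        if self.path == "/healthcheck":
            status, body = 200, b"OK"
        else:
            status, body = 404, b"not found"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Consul polls every few seconds; skip per-request logging.
        pass


def start_in_background(port: int = 5000) -> HTTPServer:
    """Serve on all interfaces in a daemon thread; port 0 picks a free port."""
    server = HTTPServer(("0.0.0.0", port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def probe(url: str, timeout: float = 2.0) -> int:
    """Return the HTTP status for `url`, roughly what Consul's check inspects."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
```

A real endpoint would usually check its own dependencies before answering 200, so that Consul stops routing traffic to an instance that is up but broken.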

In later posts, I will try a nice tool called Registrator, also created by Jeff Lindsay. Registrator watches for newly created containers and registers them with Consul automatically, and it removes the service from Consul when the container dies.

The Python application uses the python-consul library to connect to the Consul container, and a register function registers the app with Consul:

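[code]
import os
import socket

import consul             # python-consul
import netifaces as nic   # assumed: "nic" is the netifaces module


def register():
    # Private IPv4 address of the container's eth0 interface
    ip = nic.ifaddresses('eth0')[nic.AF_INET][0]['addr']
    # Consul agent location comes from the CONSUL_IP / CONSUL_PORT env vars
    c = consul.Consul(host=os.getenv("CONSUL_IP"), port=int(os.getenv("CONSUL_PORT")))
    s = c.agent.service
    # Register "Python_app" under the container hostname, with an HTTP
    # health check that Consul runs against /healthcheck every 10 seconds
    s.register("Python_app", service_id=socket.gethostname(), address=ip, port=5000,
               http="http://" + ip + ":5000/healthcheck", interval="10s", tags=['python'])
[/code]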

The application reads two environment variables, CONSUL_IP and CONSUL_PORT, to locate the Consul agent. It then registers a “Python_app” service under the container's hostname and adds an HTTP health check that Consul runs against /healthcheck every 10 seconds.
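python-consul's register call is a wrapper over the agent endpoint `PUT /v1/agent/service/register`. For comparison, here is a sketch of the same registration using only the Python standard library. The field names (ID, Name, Tags, Address, Port, Check) follow Consul's HTTP API; the helper names and defaults are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, Sequence


def registration_payload(name: str, service_id: str, address: str, port: int,
                         tags: Sequence[str] = (), check_path: str = "/healthcheck",
                         interval: str = "10s") -> Dict[str, Any]:
    """Body for PUT /v1/agent/service/register with an embedded HTTP check."""
    return {
        "ID": service_id,
        "Name": name,
        "Tags": list(tags),
        "Address": address,
        "Port": port,
        "Check": {"HTTP": f"http://{address}:{port}{check_path}", "Interval": interval},
    }


def register_raw(consul_ip: str, consul_port: int, payload: Dict[str, Any]) -> int:
    """Send the registration to the agent; returns the HTTP status."""
    request = urllib.request.Request(
        f"http://{consul_ip}:{consul_port}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request, timeout=5) as resp:
        return resp.status
```

Inside the container this would be called as `register_raw(os.environ["CONSUL_IP"], int(os.environ["CONSUL_PORT"]), payload)`. It is the same request the python-consul version sends, just without the extra dependency.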

Building The Python Docker Image

The Docker image installs the tools needed to run the Python application, including python, python-pip, and virtualenv. It also defines a GITHUB_REPO environment variable pointing at the repository that is cloned when the container starts:

[code]FROM ubuntu:14.04
MAINTAINER Hussein Galal
ENV GITHUB_REPO=https://github.com/galal-hussein/pythonapp-consul.git
RUN apt-get update
RUN apt-get install -y python python-dev python-pip python-virtualenv python-setuptools
RUN apt-get install -y git build-essential
RUN mkdir -p /var/www
ADD run.sh /tmp/run
RUN chmod a+x /tmp/run
WORKDIR /var/www
EXPOSE 5000
ENTRYPOINT /tmp/run[/code]

The Dockerfile exposes port 5000 and adds the run.sh script, which clones the repository, installs the requirements into a virtualenv, and starts the application:

[code]
#!/bin/bash
git clone $GITHUB_REPO site
cd site && virtualenv venv && . venv/bin/activate && pip install -r requirements.txt
python app.py runserver[/code]

The next step is to build and push the Docker image to be used later in the example:

[code]
$ docker build -t husseingalal/pythonapp .
$ docker push husseingalal/pythonapp
[/code]

For the sake of simplicity, I will create the containers locally, so they register themselves with their private IPs. Later in the example, we will use consul-template with nginx to load-balance requests across the application containers.

Setting up Nginx with consul-template

Before launching the service containers, I will start an nginx container with the consul-template daemon, which queries the Consul instance and updates a specified template on the file system. It can also run arbitrary commands when the template changes, such as restarting the nginx service:

[code]$ apt-get update && \
apt-get install -y nginx golang && \
export GOPATH=/usr/share/go
$ git clone https://github.com/hashicorp/consul-template.git
$ cd consul-template
$ make
[/code]

Then we create an nginx template that consul-template uses to update the nginx configuration according to the healthy services in Consul:

/tmp/site.tmpl:

[code highlight="2,3"]
upstream backend {
{{range service "Python_app" "passing"}}
    server {{.Address}}:{{.Port}};{{end}}
}

server {
    listen 80 default_server;
    root /usr/share/nginx/html;
    index index.html index.htm;
    server_name localhost;

    location / {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_set_header Host $host;
        proxy_pass http://backend;
        try_files $uri $uri/ =404;
    }
}
[/code]
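What consul-template does with this template can be approximated in a few lines: read the passing instances from `/v1/health/service/Python_app?passing` and write one `server` line per instance. Here is a Python sketch under that assumption. The helper names are hypothetical, and falling back to the node's address when a service registered without one is my assumption about how `.Address` gets filled in.

```python
import json
from typing import List


def upstream_servers(health_body: str) -> List[str]:
    """host:port for each entry of a /v1/health/service/<name>?passing response."""
    servers = []
    for entry in json.loads(health_body):
        service = entry["Service"]
        # Assumption: an empty service address falls back to the node's address.
        address = service.get("Address") or entry["Node"]["Address"]
        servers.append(f"{address}:{service['Port']}")
    return servers


def render_upstream(name: str, servers: List[str]) -> str:
    """Render an nginx upstream block like the one the template produces."""
    lines = [f"upstream {name} {{"]
    lines += [f"    server {server};" for server in servers]
    lines.append("}")
    return "\n".join(lines) + "\n"
```

With no passing instances the upstream block is empty, and nginx refuses to start with an empty upstream. That is why the first run below fails before any app container is up.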

We can now start consul-template to watch Consul and re-render the template whenever a service registers with Consul or its health changes:

[code]
$ sudo bin/consul-template -consul=178.62.122.178:8500 \
-template "/tmp/site.tmpl:/etc/nginx/sites-available/default:service nginx restart" \
-log-level=debug
[/code]

At first, the command above will fail to start nginx, because no services are running yet and the generated upstream block is empty. Now it's time to launch the app containers:

[code]
$ sudo docker run -d -e CONSUL_IP=178.62.122.178 \
-e CONSUL_PORT=8500 --name app1 -P -h app1 husseingalal/pythonapp
[/code]

It doesn't matter that we didn't map port 5000 to a known host port (-P publishes it on a random host port, 32784 below), because the application registers itself with the container's private IP and we reach it through that IP. In a real-world production setup, this would change according to the proposed architecture.

[code highlight="3"]
$ sudo docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
eaa54916eda2 husseingalal/pythonapp:latest "/bin/sh -c /tmp/reg 2 seconds ago Up 2 seconds 0.0.0.0:32784->5000/tcp app1
61218c640e9c progrium/consul:latest "/bin/start -server 2 hours ago Up 2 hours 0.0.0.0:53->53/udp, 0.0.0.0:8300-8302->8300-8302/tcp, 0.0.0.0:8400->8400/tcp, 0.0.0.0:8301-8302->8301-8302/udp, 53/tcp, 0.0.0.0:8500->8500/tcp consul_s1
[/code]

Now consul-template queries the Consul server, updates the configuration from the template, and restarts nginx:

[code]
…..
2015/05/12 20:40:13 [DEBUG] ("service(Python_app [passing])") querying Consul with &{Datacenter: AllowStale:false RequireConsistent:false WaitIndex:753 WaitTime:1m0s Token:}
2015/05/12 20:40:13 [DEBUG] (runner) checking ctemplate &{Source:/tmp/site.tmpl Destination:/etc/nginx/sites-available/default Command:service nginx restart}
2015/05/12 20:40:13 [DEBUG] (runner) wouldRender: true, didRender: true
2015/05/12 20:40:13 [DEBUG] (runner) appending command: service nginx restart
2015/05/12 20:40:13 [INFO] (runner) diffing and updating dependencies
2015/05/12 20:40:13 [DEBUG] (runner) "service(Python_app [passing])" is still needed
2015/05/12 20:40:13 [DEBUG] (runner) running command: `service nginx restart`
* Restarting nginx nginx [ OK ]
2015/05/12 20:40:15 [INFO] (runner) watching 1 dependencies
…..[/code]
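The WaitIndex and WaitTime fields in the debug output are Consul blocking-query parameters. The watcher re-issues the same query with the last index it saw, and Consul holds the request open until the result changes or the wait time runs out. The following is a minimal Python sketch of such a watch loop; the function names are hypothetical, and it is not consul-template's actual implementation.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, List, Optional


def blocking_url(base_url: str, service: str, index: int, wait: str = "1m") -> str:
    """Health query for passing instances that blocks until the result
    changes past `index` or `wait` elapses."""
    name = urllib.parse.quote(service)
    return f"{base_url}/v1/health/service/{name}?passing&index={index}&wait={wait}"


def next_index(header_value: Optional[str], previous: int) -> int:
    """Read the new X-Consul-Index; reset to 0 if it ever goes backwards."""
    new = int(header_value) if header_value else previous
    return 0 if new < previous else new


def watch(base_url: str, service: str, on_change: Callable[[List[dict]], None]) -> None:
    """Call on_change(entries) whenever Consul reports a new index for the query."""
    index = 0
    while True:
        url = blocking_url(base_url, service, index)
        # Consul may hold the request for the whole wait time, so allow for it.
        with urllib.request.urlopen(url, timeout=90) as resp:
            new_index = next_index(resp.headers.get("X-Consul-Index"), index)
            entries = json.loads(resp.read().decode("utf-8"))
        if new_index != index:
            on_change(entries)
        index = new_index
```

A new index does not always mean the data changed, so a real watcher such as consul-template also compares the rendered output before running its command.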

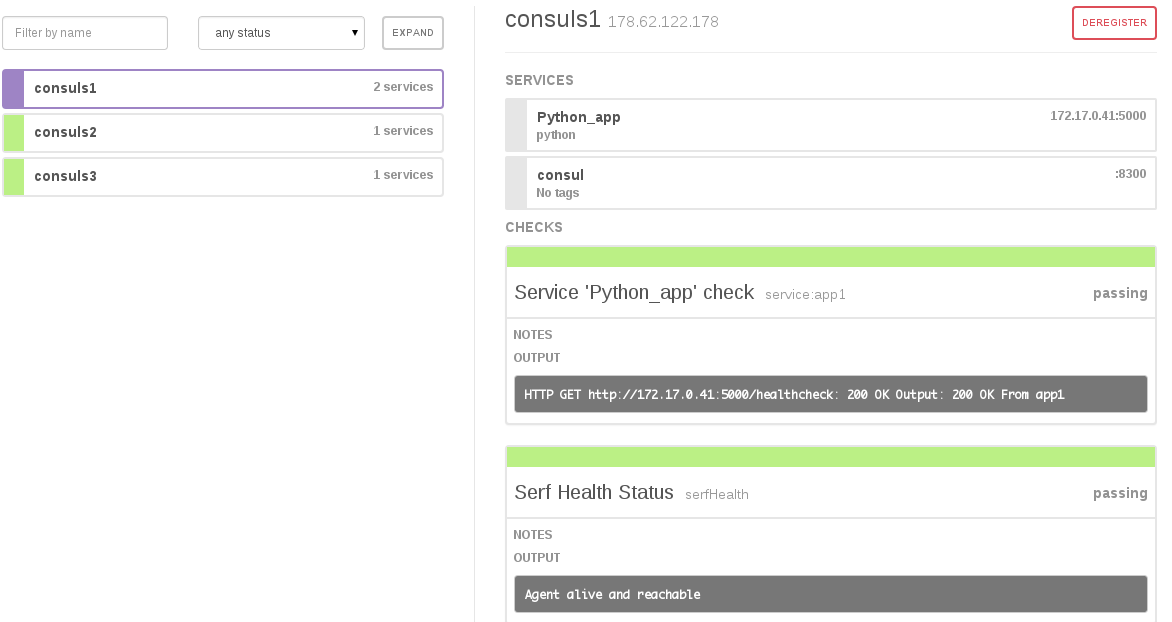

And we can see that the service is registered successfully and healthy:

Similarly, we can run more app containers the same way we ran app1. Each time, consul-template regenerates the configuration and restarts nginx:

[code]
$ sudo cat /etc/nginx/sites-available/default
upstream backend {
    server 172.17.0.43:5000;
    server 172.17.0.44:5000;
    server 172.17.0.45:5000;
}
….
[/code]

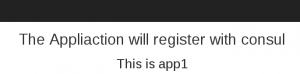

And if you access the load balancer:

What’s next

Service discovery plays an essential role in every distributed and scalable environment. In this post, we briefly discussed how to register services manually from within the application itself, but there is a lot more to this topic. In the next post(s), we will discuss using the key/value store as a shared configuration store, deregistering services when they fail, and using Registrator with Consul to register and deregister services automatically. More on that later. Stay tuned!