02 Jul 2015 Building an automatic environment using Consul and Docker – Part 2

In the previous post, we talked about service discovery and how it has become an important component of modern environments. We set up a Consul cluster, registered and deregistered a Python application with Consul, and saw how to use consul-template to update the load balancer's configuration whenever a new app server is added.

The problem with the previous setup is that every service in the environment has to find its own way to register itself with the service discovery tool. This becomes painful and hard to maintain when you are working with multi-tier systems made of many services.

In this post I will use a new tool, Registrator, to decouple the registration step from the service itself. I will also use Docker Swarm to set up a cluster of Docker daemons that can be managed from a single place.

Registrator

Registrator is yet another great tool developed by Jeff Lindsay. It uses the ports published by Docker containers, along with their metadata, to register them with several supported service discovery tools such as Consul, etcd, and SkyDNS 2.

Registrator watches for new containers and registers them based on their published ports and a set of environment variables that describe the container's metadata. It also removes a container from the service directory when the container dies. Check out the main repository for more information on Registrator.
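To make these registration rules concrete, here is a minimal Python sketch of how a container's published ports and `SERVICE_*` environment variables could map to a Consul service entry. It is a simplified model and not Registrator's actual code. The helper name `service_entries` is hypothetical, and so is the fallback to the bare image name when `SERVICE_NAME` is not set. The `<registrator hostname>:<container name>:<exposed port>` ID format mirrors the ServiceIDs that Consul reports later in this post.

```python
from dataclasses import dataclass


@dataclass
class ServiceEntry:
    service_id: str
    name: str
    host_port: int


def service_entries(registrator_host, container_name, image, env, ports):
    """Hypothetical model: `ports` maps exposed (container) port -> published host port."""
    # SERVICE_IGNORE (as set on the Consul containers) skips the container entirely.
    if env.get("SERVICE_IGNORE"):
        return []
    # SERVICE_NAME overrides the name; the default here is assumed to be
    # the image name without its repository prefix or tag.
    default_name = image.split("/")[-1].split(":")[0]
    name = env.get("SERVICE_NAME", default_name)
    entries = []
    for exposed, published in sorted(ports.items()):
        # ID format as seen in the catalog output: host:container:exposed_port
        service_id = f"{registrator_host}:{container_name}:{exposed}"
        entries.append(ServiceEntry(service_id, name, published))
    return entries


app3 = service_entries("registrator_1", "python_app3", "husseingalal/pythonapp2",
                       {"SERVICE_NAME": "Python_app"}, {5000: 5001})
print(app3)
```

Applied to the `python_app3` container from the Testing section below, the model yields the same ServiceID and host port 5001 that Consul reports for it.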

Docker Swarm

Docker Swarm is an orchestration and clustering tool for Docker that sets up a cluster of Docker daemons running on different hosts. It does this by using a service discovery backend to keep track of the registered daemons. Swarm supports multiple backends, including ZooKeeper, etcd, and Consul, which is the one we will use in our example.

The Swarm hosts are managed by the Swarm manager, which can be any machine that can reach both the service discovery backend and the Docker daemons on the different nodes. For more information, see the Docker Swarm documentation.

Seeing the Big Picture

The point of adding more tools to the setup is to increase automation and bring the build closer to production when creating a fully distributed system powered by Docker.

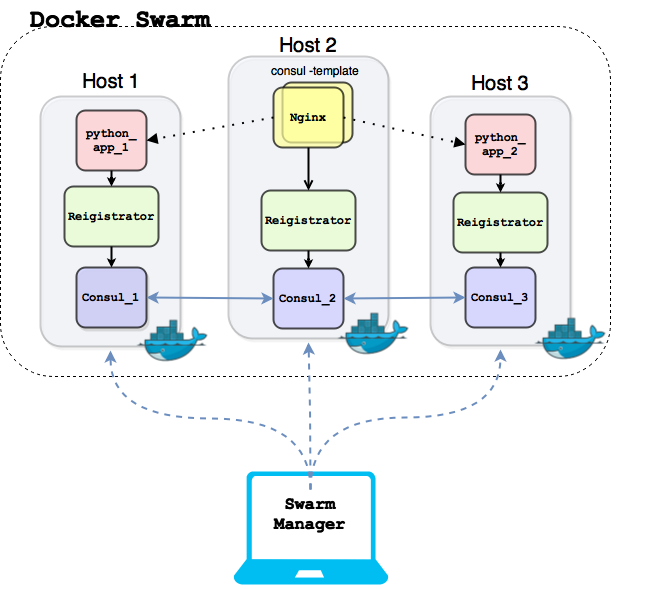

To picture the final result, I drew a small diagram (above) showing all of the setup components and how they interact with each other.

The setup consists of:

- Three cloud instances, each with Docker 1.6.2 installed and listening on both a unix socket (/var/run/docker.sock) and a TCP socket (tcp://0.0.0.0:2375).

- Three Consul containers, one on each host, with their ports published on each machine's public interface so that the Swarm manager can reach them later.

- Each Docker daemon registers itself with Consul using the swarm tool, which also runs as a container on each host but will be removed after registration.

- A Registrator container on each host, responsible for registering Docker containers with Consul and deregistering them when they die.

- Two Python app containers, which we created in the last post. Registrator will register both with Consul automatically.

- On the load balancer host (lb), I will run Nginx, this time also in a Docker container (unlike the last post). Nginx will run together with consul-template, which keeps its configuration updated whenever an app is added or removed.

I will be running the Swarm manager on my own laptop. It communicates with any of the Consul hosts to discover the Swarm nodes, and it can launch containers on the cluster.

Important Note

In order for the Swarm manager to communicate with the Swarm nodes, the Docker daemons and Consul will be publicly accessible. This is not acceptable for a real production environment. There, TLS authentication must be enabled on the Docker daemons and also between the manager and the service discovery backend.

Enough explanation, let’s dive into building the setup.

Docker Configuration

It goes without saying that Docker must be installed on all three machines. In addition, a few options must be added to each Docker daemon:

[code]

node1# vim /etc/default/docker
DOCKER_OPTS="-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock \
--label role=app_1"

node2# vim /etc/default/docker
DOCKER_OPTS="-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock \
--label role=app_2"

lb# vim /etc/default/docker
DOCKER_OPTS="-H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock \
--label role=lb"

[/code]

Docker Swarm uses these labels to schedule a container on a specific host. This is what enables Swarm's constraint filter.
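As a rough illustration of what the constraint filter does, the sketch below picks the nodes whose daemon labels satisfy a `constraint:key==value` expression. It assumes each daemon carries a `role` label matching the constraints used later in this post. It only handles exact `==` matches, so it is a simplified model of Swarm's behavior. The function names `parse_constraint` and `matching_nodes` are hypothetical.

```python
def parse_constraint(expr):
    """Split 'constraint:role==app_1' into ('role', 'app_1')."""
    prefix = "constraint:"
    if not expr.startswith(prefix):
        raise ValueError(f"not a constraint: {expr!r}")
    key, sep, value = expr[len(prefix):].partition("==")
    if not sep:
        raise ValueError(f"only '==' constraints are handled here: {expr!r}")
    return key, value


def matching_nodes(nodes, expr):
    """Return the names of nodes whose labels contain key=value."""
    key, value = parse_constraint(expr)
    return [name for name, labels in nodes.items() if labels.get(key) == value]


# Assumed labels, one role per Docker daemon.
nodes = {
    "node1": {"role": "app_1"},
    "node2": {"role": "app_2"},
    "lb": {"role": "lb"},
}
print(matching_nodes(nodes, "constraint:role==app_1"))  # ['node1']
```

If no node matches, the list is empty. In that case the real scheduler cannot place the container at all.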

The Swarm

In the last post we discussed how to get a Consul cluster up and running, so we will not repeat those steps here. There is one important note, though. Each Consul container must be run with the environment variable -e SERVICE_IGNORE=1, which makes Registrator ignore the entire Consul container, including its published ports. Before registering the nodes as part of the Swarm cluster, make sure that the Consul cluster is up and running:

[code]

node1:~# curl localhost:8500/v1/catalog/nodes

[

{"Node":"consul_1","Address":"188.166.106.100"},

{"Node":"consul_2","Address":"188.166.26.156"},

{"Node":"consul_3","Address":"178.62.194.206"}

]

[/code]

To register each node with Consul, specify the Consul address and the key/value path under which the hosts' data will be stored. Run the following on each host, setting --addr to that host's own IP address (the example below is for node1):

[code]

node1:~# export CONSUL_LEADER=188.166.106.100
node1:~# export NODE1=188.166.106.100
node1:~# export NODE2=188.166.26.156
node1:~# export LB=178.62.194.206
node1:~# docker run -d swarm join --addr=$NODE1:2375 consul://$CONSUL_LEADER:8500/swarm

[/code]

The swarm tool registers each node with Consul by adding a record for it in Consul's key/value store. To make sure the records were added, we can use the following query:

[bash]

node1:~# curl -s http://$CONSUL_LEADER:8500/v1/kv/swarm?recurse \
| python -m json.tool

[

{

"CreateIndex": 36,

"Flags": 0,

"Key": "swarm/178.62.194.206:2375",

"LockIndex": 0,

"ModifyIndex": 88,

"Value": "MTc4LjYyLjE5NC4yMDY6MjM3NQ=="

},

{

"CreateIndex": 14,

"Flags": 0,

"Key": "swarm/188.166.106.100:2375",

"LockIndex": 0,

"ModifyIndex": 87,

"Value": "MTg4LjE2Ni4xMDYuMTAwOjIzNzU="

},

{

"CreateIndex": 28,

"Flags": 0,

"Key": "swarm/188.166.26.156:2375",

"LockIndex": 0,

"ModifyIndex": 86,

"Value": "MTg4LjE2Ni4yNi4xNTY6MjM3NQ=="

},

{

"CreateIndex": 13,

"Flags": 0,

"Key": "swarm/",

"LockIndex": 0,

"ModifyIndex": 35,

"Value": null

}

]

[/bash]
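The `Value` fields in this output are base64-encoded, because Consul's KV endpoint always returns values that way. Decoding them shows that each key simply stores the node's `address:port`. A small Python check over the entries copied from the output above (the helper name `swarm_nodes` is hypothetical):

```python
import base64
import json

# The three node records and the folder key from the ?recurse output above
# (index fields omitted).
raw = """[
  {"Key": "swarm/178.62.194.206:2375", "Value": "MTc4LjYyLjE5NC4yMDY6MjM3NQ=="},
  {"Key": "swarm/188.166.106.100:2375", "Value": "MTg4LjE2Ni4xMDYuMTAwOjIzNzU="},
  {"Key": "swarm/188.166.26.156:2375", "Value": "MTg4LjE2Ni4yNi4xNTY6MjM3NQ=="},
  {"Key": "swarm/", "Value": null}
]"""


def swarm_nodes(entries):
    """Map each node key to its decoded value, skipping the 'swarm/' folder key."""
    nodes = {}
    for entry in entries:
        if entry["Value"] is None:  # the folder key has no value
            continue
        nodes[entry["Key"]] = base64.b64decode(entry["Value"]).decode("utf-8")
    return nodes


for key, address in swarm_nodes(json.loads(raw)).items():
    print(key, "->", address)
```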

Swarm manager

The Swarm manager can be any machine that can reach the Docker nodes and the Consul backend. As mentioned before, for the sake of simplicity we won't use TLS authentication in this example. It is a must, though, if you build a similar setup in a real production environment.

To run the Swarm manager on your personal computer, either install the Swarm binary or, as I did, make sure Docker is installed and run Swarm as a container:

[code]

hussein@ubuntu:~$ export CONSUL_LEADER=188.166.106.100
hussein@ubuntu:~$ export NODE1=188.166.106.100
hussein@ubuntu:~$ export NODE2=188.166.26.156
hussein@ubuntu:~$ export LB=178.62.194.206
hussein@ubuntu:~$ docker run -d --name swarm_manager -p 2375:2375 swarm manage -H tcp://0.0.0.0:2375 consul://$CONSUL_LEADER:8500/swarm
5e0ba5d871250c92350c0520caf02fafe623808f156ef79deefd233cc0d28e58

[/code]

The manager now listens on port 2375 of your local machine. To list information about all machines in your swarm, use docker info:

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 info

Containers: 6

Strategy: spread

Filters: affinity, health, constraint, port, dependency

Nodes: 3

lb: 188.166.26.156:2375

└ Containers: 2

└ Reserved CPUs: 0 / 1

└ Reserved Memory: 0 B / 514.5 MiB

node1: 188.166.106.100:2375

└ Containers: 2

└ Reserved CPUs: 0 / 1

└ Reserved Memory: 0 B / 514.5 MiB

node2: 178.62.194.206:2375

└ Containers: 2

└ Reserved CPUs: 0 / 1

└ Reserved Memory: 0 B / 514.5 MiB

[/code]

This is awesome! I can now create, start, list, stop, and remove containers directly from my laptop. Now let’s explore how to start our application using registrator.

Starting The App

Since there is no longer any need to log in to each server to start the desired containers, we will start them all from one place. The labels we set on the Docker daemons serve as constraints that decide which container runs on which host.

Registrator needs to run on every host. Unfortunately, Docker Swarm does not support global scheduling yet (it is planned for Swarm 0.3), so we start one Registrator container per host:

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==app_1" \
--name registrator_1 \
-v /var/run/docker.sock:/tmp/docker.sock \
-h registrator_1 \
gliderlabs/registrator consul://$NODE1:8500

[/code]

That may seem complicated, so let's break down what this command does. First, it connects to the Swarm manager using -H tcp://localhost:2375. Then it runs the Registrator container with a very important environment variable, -e "constraint:role==app_1". This matches the label we added to node1's Docker daemon, so the container can only be created on node1. The -v option mounts the host's Docker socket into the container so that Registrator can see the host's containers. Finally, the command specifies the Consul target consul://$NODE1:8500, which Registrator uses to register the Docker containers.

Similarly, we run the same command for the other two hosts:

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==app_2" \
--name registrator_2 \
-v /var/run/docker.sock:/tmp/docker.sock \
-h registrator_2 \
gliderlabs/registrator consul://$NODE2:8500

[/code]

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==lb" \
--name registrator_3 \
-v /var/run/docker.sock:/tmp/docker.sock \
-h registrator_3 \
gliderlabs/registrator consul://$LB:8500

[/code]
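Without global scheduling, the three commands above differ only in the role constraint, the container name and the Consul address. The short Python sketch below generates them from a hypothetical role-to-address map, which makes it harder to mistype one of them. The `HOSTS` mapping is an assumption copied from the NODE1, NODE2 and LB exports earlier in this post. The sketch only prints the commands; each argv list could instead be handed to `subprocess.run`.

```python
import shlex

# Hypothetical mapping of each role label to the Consul agent on that host,
# taken from the NODE1, NODE2 and LB exports earlier in this post.
HOSTS = {
    "app_1": "188.166.106.100",
    "app_2": "188.166.26.156",
    "lb": "178.62.194.206",
}


def registrator_commands(hosts, manager="tcp://localhost:2375"):
    """Build one 'docker run' argv per host, emulating global scheduling by hand."""
    commands = []
    for index, (role, consul_ip) in enumerate(hosts.items(), start=1):
        name = f"registrator_{index}"
        commands.append([
            "docker", "-H", manager, "run", "-d",
            "-e", f"constraint:role=={role}",
            "--name", name,
            "-v", "/var/run/docker.sock:/tmp/docker.sock",
            "-h", name,
            "gliderlabs/registrator", f"consul://{consul_ip}:8500",
        ])
    return commands


for argv in registrator_commands(HOSTS):
    print(shlex.join(argv))
```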

Now that Registrator is up and running on all three machines, we can start our application containers on node1 and node2:

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==app_1" \
-h app1 \
--name python_app1 \
-p 5000:5000 \
-e "SERVICE_NAME=Python_app" husseingalal/pythonapp2

[/code]

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==app_2" \
-h app2 \
--name python_app2 \
-p 5000:5000 \
-e "SERVICE_NAME=Python_app" husseingalal/pythonapp2

[/code]

The previous commands run the Python application on node1 and node2. Each container start triggers the Registrator instance on that host, which registers the container with Consul automatically. To make sure the services are registered, query Consul's HTTP API:

[bash]

hussein@ubuntu:~$ curl http://$CONSUL_LEADER:8500/v1/catalog/service/Python_app | python -m json.tool

[

{

"Address": "188.166.106.100",

"Node": "consul_1",

"ServiceAddress": "",

"ServiceID": "registrator_1:python_app1:5000",

"ServiceName": "Python_app",

"ServicePort": 5000,

"ServiceTags": null

},

{

"Address": "188.166.26.156",

"Node": "consul_2",

"ServiceAddress": "",

"ServiceID": "registrator_2:python_app2:5000",

"ServiceName": "Python_app",

"ServicePort": 5000,

"ServiceTags": null

}

]

[/bash]
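consul-template will later turn this list into Nginx backends, and the essential step is picking an address and port for every instance. Consul leaves `ServiceAddress` empty when a service was registered without one, and in that case the node's `Address` should be used. A minimal Python sketch over the JSON above (the helper name `backends` is hypothetical, and only the relevant fields are kept):

```python
import json

# Relevant fields of the two entries returned by /v1/catalog/service/Python_app above.
catalog = json.loads("""[
  {"Address": "188.166.106.100", "ServiceAddress": "", "ServicePort": 5000},
  {"Address": "188.166.26.156", "ServiceAddress": "", "ServicePort": 5000}
]""")


def backends(entries):
    """Return 'host:port' strings, using the node Address when ServiceAddress is empty."""
    result = []
    for entry in entries:
        host = entry["ServiceAddress"] or entry["Address"]
        result.append(f"{host}:{entry['ServicePort']}")
    return result


print(backends(catalog))  # ['188.166.106.100:5000', '188.166.26.156:5000']
```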

The Load Balancer

Almost done. The final step is to run an Nginx container along with a consul-template container that updates Nginx's configuration whenever a new app starts or an existing one dies:

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==lb" --name nginx -p 80:80 \
-v /etc/nginx/conf.d/ nginx

[/code]

This runs a standard Nginx container with port 80 published, which Registrator will also register with Consul. The /etc/nginx/conf.d directory is declared as a volume because the consul-template container will write the rendered configuration there.

I will use avthart's consul-template image to run the consul-template tool. Before starting that container, copy the nginx.ctmpl file we created in the previous post to the /tmp/ directory on the load balancer machine.

Now run the consul-template container as follows:

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==lb" \
--name nginx-consul-template \
-e CONSUL_TEMPLATE_LOG=debug \
-v /var/run/docker.sock:/tmp/docker.sock \
-v /tmp/nginx.ctmpl:/tmp/nginx.ctmpl \
--volumes-from nginx avthart/consul-template \
-consul=$CONSUL_LEADER:8500 \
-wait=5s \
-template="/tmp/nginx.ctmpl:/etc/nginx/conf.d/default.conf:/bin/docker kill -s HUP nginx"

[/code]

Yes, that's a very long command, but it's really simple. It runs the container with the same volumes as the nginx container (--volumes-from nginx) and points it at the Consul target. It waits until the data has been stable for at least 5 seconds before rendering (-wait=5s). It then renders the /tmp/nginx.ctmpl template into /etc/nginx/conf.d/default.conf. Whenever the "Python_app" service changes, the Nginx configuration is regenerated and Nginx is sent a HUP signal so that it reloads it.
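The nginx.ctmpl template itself comes from the previous post and is not reproduced here. As a hedged sketch of the render-and-reload cycle that consul-template performs, the Python below renders a hypothetical `upstream` block from a list of backends. It triggers a reload only when the rendered text actually changes. The upstream name `python_app`, the function and class names, and the `reload` callback are illustrative assumptions, not consul-template's code.

```python
def render_nginx(backends):
    """Render a hypothetical upstream/server block for the given host:port backends."""
    servers = "\n".join(f"    server {b};" for b in sorted(backends))
    return (
        "upstream python_app {\n"
        f"{servers}\n"
        "}\n\n"
        "server {\n"
        "    listen 80;\n"
        "    location / {\n"
        "        proxy_pass http://python_app;\n"
        "    }\n"
        "}\n"
    )


class TemplateRunner:
    """Keeps the last rendered config and calls reload() only when it changes."""

    def __init__(self, reload):
        self.reload = reload
        self.current = None

    def update(self, backends):
        rendered = render_nginx(backends)
        if rendered == self.current:
            return False  # nothing changed, so Nginx is not signalled
        self.current = rendered
        self.reload(rendered)  # stands in for: docker kill -s HUP nginx
        return True


reloads = []
runner = TemplateRunner(reloads.append)
runner.update(["188.166.106.100:5000", "188.166.26.156:5000"])
runner.update(["188.166.26.156:5000", "188.166.106.100:5000"])  # same set, no reload
print(len(reloads))  # 1
```

Sorting the backends keeps the rendered output stable when Consul returns the same instances in a different order, which avoids needless reloads.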

Testing

The setup should be complete by now, with all services synced with Consul. If we create a new application container, the steps go like this:

- Create a new python_app container using the Swarm manager.

- The container is created on the specified node.

- The Registrator container on that node registers the new container with Consul.

- The consul-template container on the lb node watches the "Python_app" service. When the service changes, it updates the Nginx configuration file shared between the consul-template container and the nginx container.

- Nginx adds the new container to its pool of backend servers.

[code]

hussein@ubuntu:~$ docker -H tcp://localhost:2375 run -d \
-e "constraint:role==app_1" \
-h app3 \
--name python_app3 \
-p 5001:5000 \
-e "SERVICE_NAME=Python_app" husseingalal/pythonapp2

[/code]

[bash]

hussein@ubuntu:~$ curl -s http://$CONSUL_LEADER:8500/v1/catalog/service/Python_app | python -m json.tool

[

{

"Address": "188.166.106.100",

"Node": "consul_1",

"ServiceAddress": "",

"ServiceID": "registrator_1:python_app1:5000",

"ServiceName": "Python_app",

"ServicePort": 5000,

"ServiceTags": null

},

{

"Address": "188.166.106.100",

"Node": "consul_1",

"ServiceAddress": "",

"ServiceID": "registrator_1:python_app3:5000",

"ServiceName": "Python_app",

"ServicePort": 5001,

"ServiceTags": null

},

{

"Address": "188.166.26.156",

"Node": "consul_2",

"ServiceAddress": "",

"ServiceID": "registrator_2:python_app2:5000",

"ServiceName": "Python_app",

"ServicePort": 5000,

"ServiceTags": null

}

]

[/bash]
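Detecting the new instance boils down to comparing the current set of `Python_app` instances with the previous one. A minimal Python sketch of that comparison, using the ServiceIDs from the two catalog outputs in this post (the function name `diff_instances` is hypothetical and only illustrates what changed between the two snapshots):

```python
def diff_instances(previous, current):
    """Return (added, removed) ServiceIDs between two catalog snapshots."""
    prev_ids, curr_ids = set(previous), set(current)
    return sorted(curr_ids - prev_ids), sorted(prev_ids - curr_ids)


before = ["registrator_1:python_app1:5000", "registrator_2:python_app2:5000"]
after = before + ["registrator_1:python_app3:5000"]
print(diff_instances(before, after))  # (['registrator_1:python_app3:5000'], [])
```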

Now, if you access the load balancer's IP:

[code]

~# curl -s http://188.166.26.156/

This is app2

~# curl -s http://188.166.26.156/

This is app1

~# curl -s http://188.166.26.156/

This is app3

[/code]
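The responses rotate across the three containers because Nginx, by default, distributes requests among the servers of an `upstream` block using round-robin. The toy Python model below shows that rotation. It ignores weights, health checks and Nginx's per-worker state, so the exact order you see from curl can differ. The backend list assumes the three instances registered above.

```python
from itertools import cycle, islice

# The three Python_app instances registered in Consul above.
backends = ["188.166.106.100:5000", "188.166.106.100:5001", "188.166.26.156:5000"]


def round_robin(servers, requests):
    """Assign each of `requests` incoming requests to the next server in turn."""
    return list(islice(cycle(servers), requests))


print(round_robin(backends, 4))
```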

What’s Next

Building a functional distributed system is not an easy job. It takes a lot of work and concentration to get right, and, as we've seen in this post, many different tools and components have to be combined to build one. In the next post we will use Docker Compose to automate the build of this distributed system and, hopefully, simplify the whole process.