23 Jun 2016 Disaster recovery solution Case study

One of our clients is a pioneer in the Egyptian tourism industry. As part of their expansion plan, they established an internal software division that provides SaaS applications to their clients. The division faced a problem with its hosting model, which was not reliable enough and could seriously impact service continuity.

At first glance, we determined that the physical machine they were using would not hold up. Even if the hardware had been working properly (which was not the case) and its specifications had been suitable for the required load, it would still have been a single point of failure (SPOF) that had to be avoided.

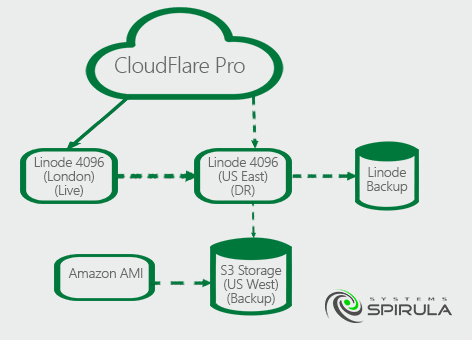

To meet their availability needs, we implemented a multi-tier disaster recovery (DR) solution. The solution provided an active-passive, geographically distributed system, with a cold site on another provider’s infrastructure to ensure availability even if the first provider failed in all of its geographical locations.

Implementing the solution involved configuring complex database and file system replication setups and building an automated, multi-stage service switching mechanism to ensure no data is lost during a service switch. By routing traffic through an external reverse proxy service, which avoids DNS propagation delays, we achieved and sustained a recovery time of under 2 minutes. The end result was a very reliable, fault-tolerant SaaS platform.
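A multi-stage switch can be thought of as an ordered list of stages, where each stage must succeed before the next one runs. The Python sketch below is illustrative only. The stage names (freeze writes on the main site, wait for the replica to catch up, promote the DR database, repoint the reverse proxy) are assumptions about what such a mechanism typically does. They are not the documented stages of this project.

```python
from typing import Callable, List, Tuple

# A stage is a (name, action) pair; the action returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_switch(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that completed. Stopping early means,
    for example, that traffic is never repointed while the replica is
    still behind, which is what protects against data loss.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            # Leave later stages untouched so an operator can inspect the state.
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical stages; real ones would call database and proxy tooling.
    stages: List[Stage] = [
        ("freeze_writes_on_main", lambda: True),
        ("wait_for_replica_catch_up", lambda: True),
        ("promote_dr_database", lambda: True),
        ("repoint_reverse_proxy", lambda: True),
    ]
    print(run_switch(stages))
```

The design choice here is fail-closed: a failed stage halts the switch rather than skipping ahead. A stuck switch is recoverable by hand; a switch that repoints traffic to a stale database is not.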

Our client’s team was able to use the DR system to rapidly update and improve their service, perform regular maintenance, and at the same time, maintain a 99.97% availability record.
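The Python sketch below converts an availability percentage into a yearly downtime budget, which puts the 99.97% figure in perspective. It makes two simplifying assumptions: a 365-day year, and that each switch costs the full 2-minute recovery time mentioned above.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def downtime_budget_minutes(availability_percent: float) -> float:
    """Return the yearly downtime (in minutes) allowed by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    budget = downtime_budget_minutes(99.97)
    print(f"{budget:.2f} minutes/year, about {budget / 60:.2f} hours")
    # prints: 157.68 minutes/year, about 2.63 hours
    # Full switches that fit in the budget if each costs 2 minutes:
    print(int(budget // 2))  # prints: 78
```

In other words, 99.97% availability leaves roughly two and a half hours of downtime per year. Under the stated assumption, that is room for several dozen 2-minute switches.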

Problem

As the old structure consisted of a single hardware server, the problem had four main parts:

- Hardware unreliability.

- The whole system ran on a single point of failure, which could cause system unavailability or even data loss.

- Migrating to another server would cause considerable downtime due to DNS propagation.

- The structure wasn’t horizontally scalable, and vertical scaling required considerable downtime.

Solution

Overview

The solution was designed to eliminate the old server’s problems while keeping cost-effectiveness in mind.


Advantages

- Having three different geographical locations spread across two continents (Europe, US East, and US West) provides high resilience against natural disasters.

- Spreading the system across two different providers ensures that business-related issues with a single provider cannot affect business continuity.

- The structure is fully redundant, with no single point of failure.

- Switching to the DR site doesn’t require waiting for DNS propagation, as the switch happens almost instantaneously via CloudFlare.

- Both horizontal and vertical scaling can be done with zero downtime, as the service can always rely on the DR site while the main site is being upgraded or modified, and vice versa.


Limitations

- Switching to the DR site is done manually. It could be hooked to a monitoring system, but such systems produce false positives that could trigger unneeded switches. This can be overcome by connecting the switch to HTTP monitoring from multiple geographical locations, although that increases the running cost.

- After switching to the DR site, rolling back is not automated, because checking database synchronization and confirming the health of the main site’s database are manual steps.

- Unlike the DR site, the second-tier DR is not synchronized in real time; it depends on restoring the latest daily backup. When switching to the second-tier DR, any data written since the last backup is expected to be lost and cannot be recovered.
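The first limitation notes that monitoring from multiple locations reduces false positives by requiring probes to agree before a switch. The Python sketch below shows that decision rule. It assumes each probe location reports a simple reachable/unreachable result; the probe names and the majority threshold are illustrative assumptions, not part of the deployed system.

```python
from typing import Dict


def should_fail_over(probe_results: Dict[str, bool], quorum: float = 0.5) -> bool:
    """Return True only when more than `quorum` of the probes report the site down.

    probe_results maps a probe location name to True if that location could
    reach the main site over HTTP, and False otherwise.
    """
    if not probe_results:
        # No data is not evidence of an outage; never switch on silence.
        return False
    failures = sum(1 for reachable in probe_results.values() if not reachable)
    return failures / len(probe_results) > quorum


if __name__ == "__main__":
    # One probe failing (e.g. a network issue near that probe) is not enough.
    print(should_fail_over({"london": False, "frankfurt": True, "new_york": True}))
    # A majority of probes failing suggests the site itself is down.
    print(should_fail_over({"london": False, "frankfurt": False, "new_york": True}))
```

Requiring a majority means a single misbehaving probe cannot trigger an unneeded switch. The trade-off is paying for more probe locations, which is the running cost mentioned above.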


Components

i. Main site

- The main site consists of a single cloud instance in London, UK. It handles all the load and serves as the source of the data replicated to the DR site.

- Traffic does not hit the site directly; it is routed through CloudFlare so that the site can be switched without waiting for DNS propagation.
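One way to perform a site switch behind CloudFlare is to repoint a proxied DNS record to the DR site's address through CloudFlare's v4 REST API; the Python sketch below builds such a request. The case study does not say whether the switch was made through the dashboard or the API. The zone ID, record ID, token and IP address are placeholders. Bearer-token authentication reflects the current API, not necessarily what was used in 2016.

```python
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def build_origin_switch_request(zone_id: str, record_id: str,
                                new_origin_ip: str,
                                api_token: str) -> urllib.request.Request:
    """Build (but do not send) a request pointing a proxied DNS record at a new origin.

    Because the record stays proxied, visitors keep resolving to CloudFlare's
    edge addresses; only the origin behind the proxy changes, so there is no
    wait for resolver caches to expire.
    """
    body = json.dumps({"content": new_origin_ip, "proxied": True}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/zones/{zone_id}/dns_records/{record_id}",
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder identifiers; 203.0.113.10 is a documentation-only address.
    req = build_origin_switch_request("ZONE_ID", "RECORD_ID", "203.0.113.10", "TOKEN")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the switch easy to review or dry-run before it touches production traffic.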

ii. DR site

- The DR site is a cloud instance of the same size as the main site, hosted by the same provider in US East to ensure geographical distribution. The site acts as a slave for database and file system synchronization from the main site. During normal operation, this site is in standby mode.

- Additionally, this site is the source of both the on-site backup on Linode and the off-site backup on AWS infrastructure.
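The case study does not name the tool used for file system synchronization. One common choice is rsync over SSH, and the Python sketch below builds an rsync command that mirrors a directory to the DR site. Using rsync at all is an assumption, and the paths and the `dr-site` host alias are hypothetical.

```python
import shlex
from typing import List


def build_rsync_command(src_dir: str, dr_host: str, dest_dir: str,
                        dry_run: bool = False) -> List[str]:
    """Build an rsync command that mirrors src_dir to dest_dir on the DR host.

    -a preserves permissions, timestamps and symlinks; -z compresses data in
    transit; --delete removes files on the DR side that no longer exist on the
    main site, so the two trees stay identical.
    """
    # A trailing slash on the source copies the directory's contents,
    # not the directory itself.
    src = src_dir.rstrip("/") + "/"
    cmd = ["rsync", "-az", "--delete"]
    if dry_run:
        cmd.append("--dry-run")  # report what would change without changing it
    cmd += [src, f"{dr_host}:{dest_dir}"]
    return cmd


if __name__ == "__main__":
    # Hypothetical paths and host alias.
    cmd = build_rsync_command("/var/www/app", "dr-site", "/var/www/app", dry_run=True)
    print(shlex.join(cmd))
    # Run it with: subprocess.run(cmd, check=True)
```

Note that `--delete` makes the mirror exact but also propagates accidental deletions. This is one reason the separate daily backups still matter.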

iii. Backup and backup restoration automation

- This consists of a pre-configured AMI (Amazon Machine Image) holding the configuration needed to operate another DR site. This site serves as a second level of redundancy, launching the service from the latest available backup. Loading the latest backup is automated when the AMI is launched.
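Loading the latest backup at launch mostly depends on picking the newest backup reliably. The Python sketch below shows that selection step. It assumes a hypothetical `backup-YYYY-MM-DD.tar.gz` naming scheme; the case study does not describe the actual backup format or storage layout.

```python
from datetime import datetime
from typing import Iterable, Optional

# Hypothetical naming scheme: backup-YYYY-MM-DD.tar.gz
PREFIX, SUFFIX = "backup-", ".tar.gz"


def latest_backup(names: Iterable[str]) -> Optional[str]:
    """Return the name of the most recent backup, or None if none match the scheme."""
    dated = []
    for name in names:
        if not (name.startswith(PREFIX) and name.endswith(SUFFIX)):
            continue  # skip unrelated files
        stamp = name[len(PREFIX):-len(SUFFIX)]
        try:
            dated.append((datetime.strptime(stamp, "%Y-%m-%d"), name))
        except ValueError:
            continue  # skip files whose date part is malformed
    return max(dated)[1] if dated else None


if __name__ == "__main__":
    files = ["backup-2016-06-21.tar.gz", "backup-2016-06-22.tar.gz", "notes.txt"]
    print(latest_backup(files))  # prints: backup-2016-06-22.tar.gz
```

Parsing the date, rather than sorting file names as strings, makes the choice robust to unrelated or malformed files in the backup location.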

Implementation procedure

a. Old server mapping

The first step was analyzing and documenting the current site setup and configuration. This included removing any dependency on cPanel, as it can’t be used on the new system.

b. DevOps Automation

An Ansible playbook was created to build the environment automatically on any server. It was used to create the main and DR sites, and it is maintained afterwards so that it can create or update any environment to be identical to the main site.

c. Main site implementation

The actual implementation and final configuration of the main site were done using the Ansible playbook created in the previous step.

d. DR site and backup implementation.

The DR site was implemented using the Ansible playbook. This step also included setting up data synchronization and replication between the main and DR sites, as well as configuring several automated backup processes, backup testing, and backup monitoring.

e. Second Tier DR procedure

Using the Ansible playbook and the backup, a server image was to be created on another provider’s infrastructure. Additionally, an automated process would have been created to launch the service from the backup taken from the DR site. This part of the project wasn’t implemented, at the client’s request, to save costs.

f. Testing and configuration verification.

The Spirula Systems and Memphis teams worked together to test and verify the system configuration and its proper operation on both the main and DR sites. This also included testing the failover and recovery procedures.

g. Service Switch

The new main site was introduced into production. This required scheduled downtime to ensure data consistency, as the old structure didn’t allow a zero-downtime migration.

h. Old server decommissioning

The old server was fully backed up. Its services were stopped, and the server remained online for a few days to make sure there were no hidden service dependencies on it. Once that was confirmed, the server was powered off and decommissioned.

i. On-site training

After the solution implementation was completed, Spirula’s representative gave a training session and handed the system over to our client’s staff. The training lasted only 6 hours, as it assumed attendees already had a basic understanding of Linux system administration. It took place at our client’s premises and focused on the following points:

- Solution architecture.

- Overview of the installed components and services.

- Basic explanation of the scripts and configurations used.

- Paths of the scripts and configurations.

- Common modifications to the scripts and configurations.